If you’re like me, you might find that working with JSON data can often feel like trying to untangle a ball of wool. Nested structures are common in JSON files and can become particularly convoluted when they contain multiple levels of nested data. In the past, I’ve spent hours creating CTEs in SQL to solve this problem, only to wind up with a complex and hyper-specialized query that often didn’t even create exactly the output I was hoping for. Thankfully, with the help of dbt, this process can be simplified. In this tutorial, we will take you through a hands-on guide to turn complex nested JSON files into accessible, flattened tables ready for analysis!

The Challenge:

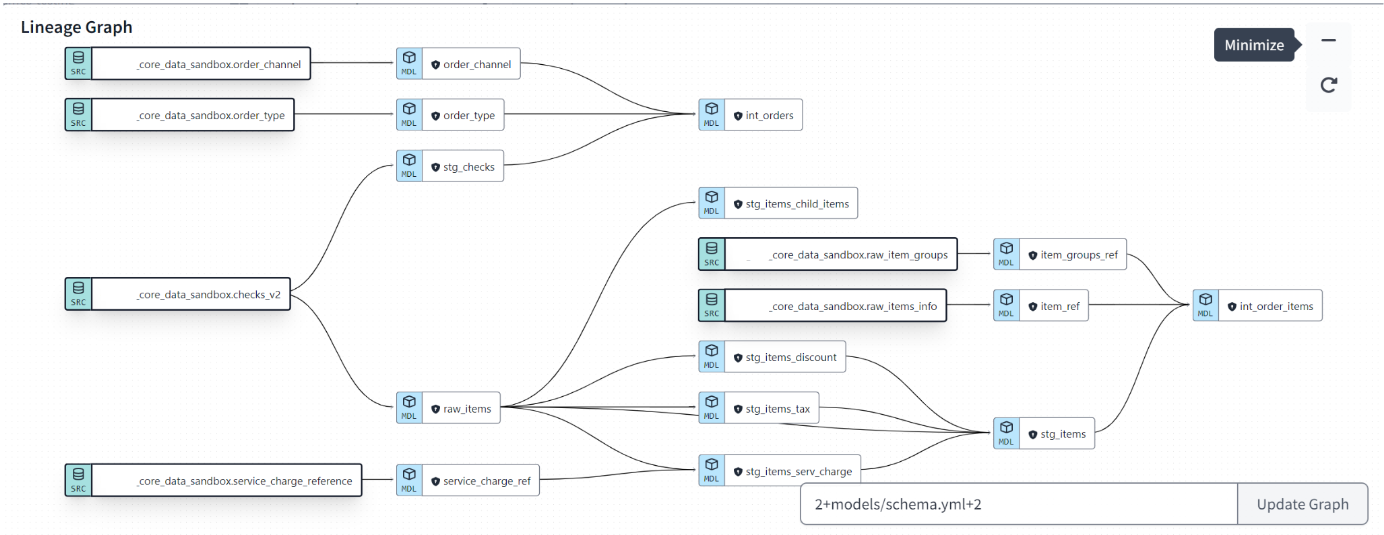

Imagine you have a JSON file, sourced from an API response, that logs customer transactions. Each transaction record is dense — with nested data detailing items, service charges, discounts, and more. Your goal is to structure this data into a relational format within BigQuery — preserving the intricate details without losing the overarching context of each transaction. You could modify your python script to unnest these components into their own individual dataframes, but you’d prefer to have the progression from raw to structured data take place in a manner that is easy to audit and to trace in your Data Architecture. This is a perfect use-case for dbt.

The Solution — Step-by-Step Unnesting with dbt and BigQuery:

Step 1 — Create a Raw Data Table BigQuery: Begin by creating a new table in your database that contains the raw JSON response. Don’t worry about renaming columns or even fixing data types at this point — all of that can be handled within dbt.

Step 2— Set Up Your dbt Model: Next, create a models folder within your dbt project that you can begin working with. Within that folder, create a YAML file which you will use to tell dbt which tables you’ll need to pull in from the dataset. For this example, we’ll be working with the raw_orders table

Step 3 — Extract JSON Arrays: Using BigQuery’s JSON_EXTRACT_ARRAY function, you can now begin to extract the nested arrays within your JSON column. This step converts the JSON strings into a format that can be expanded into separate rows. Here we will be specifically unnesting the data within the ‘Items’ column:

WITH base AS (

SELECT

order_id,

JSON_EXTRACT(item, '$') AS item_json

FROM

{{ source('your_dataset', 'raw_orders') }}

),

Step 4— Unnest the JSON Data: After unnesting the Items column, you can proceed to extract the details of each individual item. BigQuery’s JSON_EXTRACT_SCALAR function is perfect for this, as it allows us to pull out scalar values from the JSON object.

unnested_items AS (

SELECT

base.order_id,

JSON_EXTRACT_SCALAR(item, '$.item_id') AS item_id,

JSON_EXTRACT_SCALAR(item, '$.quantity') AS quantity,

JSON_EXTRACT_SCALAR(item, '$.price') AS individual_item_price,

JSON_EXTRACT(item, '$.service_charge') AS service_charge_nested,

JSON_EXTRACT(item, '$.discount') AS discount_nested

FROM

base,

UNNEST(JSON_EXTRACT_ARRAY(base.item_json, '$')) AS item

)

SELECT * FROM unnested_items

With this structure, you have now prepared each row to represent an individual item from the original nested JSON, with its identification and transactional details neatly extracted into separate columns.

Step 5— Run Your dbt Project and Build out your DAG: After testing to ensure the above works as intended for your use case, you can execute the dbt run command to push this new table to your BigQuery instance.

Other tips to keep in mind

- Make Sure Your Nested JSON Data is Actually in a Readable JSON Format: You might need to add a few additional lines of code to ensure that the nested JSON is formatted properly.

- Be Intentional About How Far You Want the Unnesting to Go: In working with these JSON responses, you may run into instances with recursively nested child items. Investigate if they are adding value to your analysis.

- Brush up on your dbt documentation: If you’re new to dbt, take a moment to brush up on the tool. This link is a great place to start!

The combination of dbt and BigQuery is a powerful toolkit for handling JSON data. By following this guide, you can transform even the most nested JSON structures into a well-organized database schema.

For further guidance, or if you have specific challenges with your JSON data transformations, feel free to reach out to us at nick@southshore.llc and james@southshore.llc. You can also find us at our website at southshore.llc.